B.F. Skinner (1904-1990)

Operant conditioning rests on the following principles:

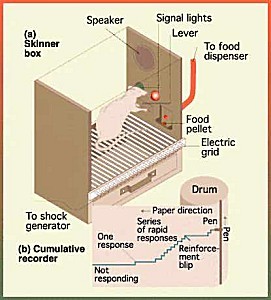

1. The organism is in the process of “operating” on the environment. While “operating,” the organism encounters a special kind of stimulus, called a reinforcing stimulus, or simply a reinforcer. This special stimulus has the effect of increasing the operant, that is, the behavior occurring just before the reinforcer. This is operant conditioning: “the behavior is followed by a consequence, and the nature of the consequence modifies the organism's tendency to repeat the behavior in the future.”

2. A behavior followed by a reinforcing stimulus results in an increased probability of that behavior occurring in the future.

3. A behavior no longer followed by the reinforcing stimulus results in a decreased probability of that behavior occurring in the future. This is called extinction.

4. Schedules of reinforcement

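Different schedules of reinforcement produce different patterns of behavior. Apart from continuous reinforcement, the basic schedules are either ratio schedules (based on the number of responses) or interval schedules (based on elapsed time), and each kind can be fixed or variable.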

Continuous reinforcement is the original scenario: Every time that the rat does the behavior (such as pedal-pushing), he gets a rat goodie.

The fixed ratio schedule was the first one Skinner discovered: If the rat presses the pedal three times, say, he gets a goodie. Or five times. Or twenty times. Or “x” times. There is a fixed ratio between behaviors and reinforcers: 3 to 1, 5 to 1, 20 to 1, etc. This is a little like “piece rate” in the clothing manufacturing industry: You get paid so much for so many shirts.
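The counting rule behind a fixed ratio schedule is simple enough to sketch in a few lines of Python. This is a minimal illustration, not code from Skinner's lab: the function name `fixed_ratio` and the idea of calling it once per bar press are assumptions made for the example. Setting the ratio to 1 gives continuous reinforcement, since every press is then rewarded.

```python
def fixed_ratio(ratio: int):
    """Return a function that is called once per response (bar press) and
    reports whether that response earns a goodie under a fixed ratio schedule.
    Hypothetical helper for illustration only."""
    if ratio < 1:
        raise ValueError("ratio must be at least 1")
    count = 0  # presses since the last goodie

    def respond() -> bool:
        nonlocal count
        count += 1
        if count == ratio:
            count = 0  # start counting again after each goodie
            return True
        return False

    return respond


fr3 = fixed_ratio(3)  # 3 presses per goodie (3 to 1)
print([fr3() for _ in range(9)])
# [False, False, True, False, False, True, False, False, True]
```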

The fixed interval schedule uses a timing device of some sort. Once a particular stretch of time (say 20 seconds) has passed since the last goodie, the rat's next press on the bar earns him a goodie. Presses made before the time is up earn nothing, so even if he hits that bar a hundred times during those 20 seconds, he still only gets one goodie! One strange thing that happens is that the rats tend to “pace” themselves: They slow down the rate of their behavior right after the reinforcer, and speed up when the time for it gets close.
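A fixed interval schedule depends on time rather than on a count. The sketch below assumes the common laboratory convention that the first press made after the interval has elapsed is reinforced and that the timer restarts at that reinforcer. The class `FixedInterval` is hypothetical, and press times are passed in explicitly (in seconds) so that no real clock is needed.

```python
class FixedInterval:
    """Fixed interval schedule: the first response made after `interval`
    seconds have passed since the last goodie is reinforced."""

    def __init__(self, interval: float, start: float = 0.0):
        self.interval = interval
        self.available_at = start + interval  # when the next goodie becomes available

    def respond(self, t: float) -> bool:
        """Record a press at time t (seconds); return True if it earns a goodie."""
        if t >= self.available_at:
            # Restart the timer from the moment of reinforcement.
            self.available_at = t + self.interval
            return True
        return False  # too early: extra presses earn nothing


fi20 = FixedInterval(20.0)
press_times = [1, 5, 12, 19, 21, 22, 30, 41, 42]
print([t for t in press_times if fi20.respond(t)])  # [21, 41]
```

Only two of the nine presses pay off, no matter how many presses come before the interval is up.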

Skinner also looked at variable schedules. Variable ratio means you change the “x” each time -- first it takes 3 presses to get a goodie, then 10, then 1, then 7 and so on. Variable interval means you keep changing the time period -- first 20 seconds, then 5, then 35, then 10 and so on.
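The variable schedules differ from the fixed ones only in that the requirement changes after each reinforcer. In real experiments the values are usually drawn at random around an average; to keep this sketch easy to follow, the hypothetical classes below simply cycle through the example values from the text (3, 10, 1, 7 presses and 20, 5, 35, 10 seconds). The interval version uses the same first-press-after-the-interval convention assumed for the fixed interval case.

```python
from itertools import cycle


class VariableRatio:
    """Variable ratio schedule: the number of presses needed for each
    goodie changes from one goodie to the next."""

    def __init__(self, requirements):
        self._requirements = cycle(requirements)  # e.g. 3, 10, 1, 7, 3, ...
        self._needed = next(self._requirements)
        self._count = 0

    def respond(self) -> bool:
        self._count += 1
        if self._count >= self._needed:
            self._count = 0
            self._needed = next(self._requirements)  # new "x" for the next goodie
            return True
        return False


class VariableInterval:
    """Variable interval schedule: the waiting time before the next goodie
    becomes available changes each time."""

    def __init__(self, intervals, start: float = 0.0):
        self._intervals = cycle(intervals)  # e.g. 20, 5, 35, 10, 20, ...
        self._available_at = start + next(self._intervals)

    def respond(self, t: float) -> bool:
        if t >= self._available_at:
            self._available_at = t + next(self._intervals)
            return True
        return False


vr = VariableRatio([3, 10, 1, 7])
print([n for n in range(1, 22) if vr.respond()])  # [3, 13, 14, 21]

vi = VariableInterval([20, 5, 35, 10])
print([t for t in range(1, 81) if vi.respond(t)])  # [20, 25, 60, 70]
```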

5. Shaping: Shaping is “the method of successive approximations.” Basically, it involves first reinforcing a behavior only vaguely similar to the one desired. Once that is established, you look out for variations that come a little closer to what you want, and so on, until you have the animal performing a behavior that would never show up in ordinary life. Skinner and his students have been quite successful in teaching simple animals to do some quite extraordinary things. My favorite is teaching pigeons to bowl!
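To make the idea of successive approximations concrete, here is a toy simulation; it is not Skinner's actual procedure. Everything in it is an assumption made for illustration: behavior is reduced to a single number, the animal's responses vary randomly (Gaussian noise of size `spread`) around its current typical behavior, each reinforced response pulls that typical behavior partway toward itself (`learning_rate`), and the criterion tightens to the closest approximation reinforced so far. The function `shape` and all of its parameters are hypothetical.

```python
import random


def shape(target: float, start: float, steps: int = 2000,
          spread: float = 1.0, learning_rate: float = 0.5, seed: int = 0) -> float:
    """Toy model of shaping. Returns the animal's typical behavior
    (a hypothetical 1-D measure) after `steps` trials."""
    rng = random.Random(seed)
    typical = start
    # First, reinforce anything even slightly closer than the starting behavior.
    criterion = abs(target - start)
    for _ in range(steps):
        response = rng.gauss(typical, spread)  # natural variation around typical behavior
        distance = abs(target - response)
        if distance < criterion:
            # The reinforced variant becomes more likely: typical behavior moves toward it.
            typical += learning_rate * (response - typical)
            # From now on, only an even closer approximation is reinforced.
            criterion = distance
    return typical


final = shape(target=10.0, start=0.0)
print(f"typical behavior after shaping: {final:.2f} (target 10.0)")
```

Under these assumptions the typical behavior ends up near the target, even though the animal's early responses (clustered around 0) never came close to it.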

6. Aversive stimuli

An aversive stimulus is the opposite of a reinforcing stimulus, something we might find unpleasant or painful.

A behavior followed by an aversive stimulus results in a decreased probability of the behavior occurring in the future. This is punishment.

Behavior followed by the removal of an aversive stimulus results in an increased probability of that behavior occurring in the future. This is called negative reinforcement.
